- OpenAI: ChatGPT Atlas (Oct 21, 2025)

OpenAI introduces its new agentic browser, Atlas. Be wary of AI browsers, as exfiltration of personal data is a real concern.

- Ed Zitron: This Is How Much Anthropic and Cursor Spend On Amazon Web Services (Oct 20, 2025)

Ever the contrarian, Zitron points out that Anthropic’s outlays are in excess of revenue. Is this sustainable forever? No. Can they operate like this for the next 3-5 years? Without a doubt.

- Simon Willison: Unseeable prompt injections in screenshots: more vulnerabilities in Comet and other AI browsers (Oct 21, 2025)

“The ease with which attacks like this can be demonstrated helps explain why I remain deeply skeptical of the browser agents category as a whole.”

- WSJ: Gas Turbine Makers Are Riding the AI Power Boom (Oct 10, 2025)

Gas turbine manufacturers are seeing a surge in demand as data centers and AI growth drive up power needs. They face the challenge of ramping up production without risking oversupply if the boom subsides, a tension that draws parallels to past market bubbles.

- WSJ: A Giant New AI Data Center Is Coming to the Epicenter of America’s Fracking Boom (Oct 15, 2025)

CoreWeave and Poolside are partnering to build a massive, self-powered data center complex, called Horizon, on a sprawling ranch in West Texas, leveraging natural gas resources to reduce costs and improve long-term viability. Considering that 700 million cubic feet of natural gas is jettisoned each day in Texas, siting the complex so close to spare hydrocarbons seems like a smart play.

- WSJ: Oracle Co-CEOs Defend Massive Data-Center Expansion, Plan to Offer AI Ecosystem (Oct 14, 2025)

Concerns remain about Oracle’s reliance on OpenAI and the profitability of its AI infrastructure build-out. $300B is a big number, even for a company of Oracle’s size.

- WSJ: Google to Invest $24 Billion in AI in U.S., India (Oct 13, 2025)

Google plans to invest approximately $15 billion in India over the next five years with another $9B for expanding a data center in South Carolina.

Blog

-

Tuesday Links (Oct. 21)

-

Monday Links (Oct. 20)

- Anthropic: Claude Code on the web (Oct 20, 2025)

A new feature allowing users to delegate coding tasks directly from their browser to cloud-based Claude instances. This enables parallel execution of tasks like bug fixes and routine changes, with real-time progress tracking, secure sandboxing, and integration with GitHub for automatic PR creation.

- Ed Zitron: This Is How Much Anthropic and Cursor Spend On Amazon Web Services (Oct 20, 2025)

Ever the contrarian, Zitron points out that Anthropic’s outlays are in excess of revenue. Is this sustainable forever? No. Can they operate like this for the next 3-5 years? Without a doubt.

- Simon Willison’s Weblog: Claude Skills are awesome, maybe a bigger deal than MCP (Oct 16, 2025)

A new method for enhancing Claude’s abilities by providing users with folders containing instructions, scripts, and resources that Claude can load when needed.

- NY Times: How Chile Embodies A.I.’s No-Win Politics (Oct 20, 2025)

Chile is grappling with the trade-offs of investing in AI, facing a dilemma between fostering economic growth and risking environmental damage and public opposition due to the resource-intensive data centers required. I would add that the cost of AI data centers is also prohibitive for many countries today.

- The Independent: Oversharing with AI: How your ChatGPT conversations could be used against you (Oct 19, 2025)

Intimate chat history is vulnerable to exploitation by law enforcement, criminals, and tech companies for targeted advertising, raising privacy concerns with limited legal protections.

- WSJ: OpenAI’s Chip Strategy: Pair Nvidia’s Chocolate With Broadcom’s Peanut Butter (Oct 17, 2025)

OpenAI seeks to diversify chip procurement to meet the growing computing demands of its AI services.

-

Is AI Development Slowing?

Just a few months ago, it felt like the prevailing narrative was the incredible and unstoppable rise of AI. Reporters left and right were profiling the site AI 2027, a techno-optimist forecast of AI’s trajectory over the next 2–3 years. But since then, I’ve noticed a rising number of more pessimistic stories: ones that talk about social and interpersonal risks, financial peril, and the idea that the development of AI technology is slowing. While the first two concerns are worth considering, today we’ll focus on the idea that AI development is slowing.

For those of you with kids, you’ll likely remember the days when they were babies, and each day seemed to bring some new incredible skill. Sitting up, crawling, talking, walking, and learning to open every cabinet door in the kitchen. It was hard to miss the almost daily changes. Family members would visit and note the changes, and as a parent, you would readily agree. The child in question inevitably had doubled in size in less than a year. But as they grew, development seemed to slow, making visiting family members the only ones to be amazed by a child’s growth. Their absence allowed them to see the remarkable change. “Wow,” they would say, “little Johnny has truly gotten big.”

I see the same with AI development today.

Models introduced last year and even earlier this year had a feeling of novelty, of magic. For many of us (yours truly included), it was an experience to see that AI tools had personality and possible utility for the first time. The examples: help me solve a problem, answer a question, clean up some writing, write a piece of code, etc. It was like watching an infant grow into someone who could talk.

Today’s AI tools are perhaps more akin to elementary-age children: the pace of change doesn’t feel as fast to many folks. The WSJ (and others) are publishing articles like “AI’s Big Leaps Are Slowing—That Could Be a Good Thing” that frame the AI story as a slowdown. But those headlines usually track product launches, not capability evolution, and I don’t see much evidence that even product launches are slowing (I can count scores of them in just the past few months). Rather, I think people came to believe AGI would mature more quickly than even the industry leaders claimed.

It’s like Bill Gates’ maxim: “We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten.”

The Nielsen Norman Group has tracked this shift in users. As conversational AI becomes the baseline, search behaviors evolve. Queries are less about “find me a link” and more about iterating with an AI assistant. In their follow-up on user perceptions, people described new agent features as “expected” rather than “wow” (NNG, 2025). The bar has moved. Our expectations have flattened because most people don’t see those agentic and long-horizon use gains. They see new AI features, feel underwhelmed, and assume the hype was overblown.

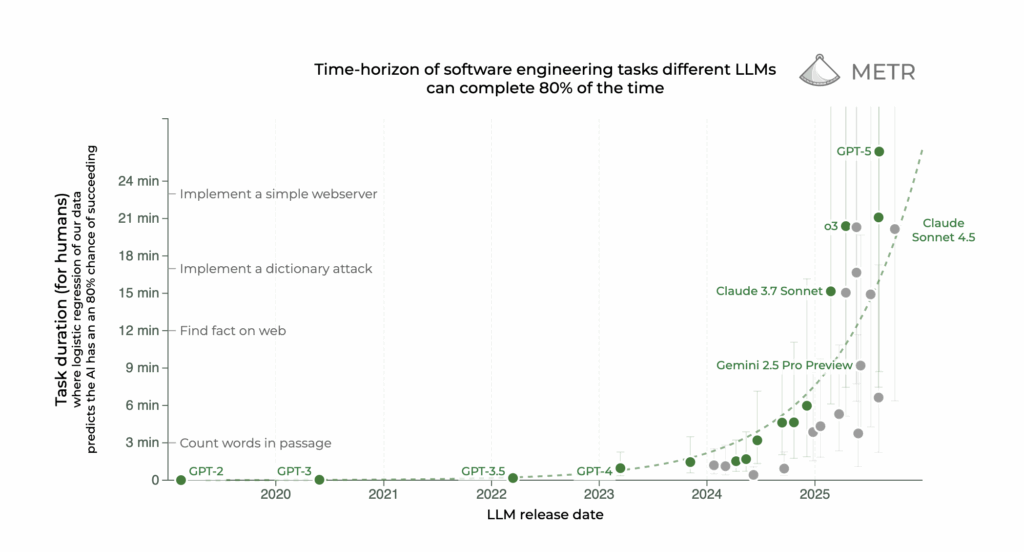

Earlier this year, METR published research showing that models are increasingly capable of long-horizon tasks, executing sequences of operations with minimal oversight. They have since updated their report with data that includes more recent models.

That’s an exponential curve, not something you’d expect with stagnation. Meanwhile, on the macroeconomic stage, activity hasn’t slowed. AI investment is still surging, with economists crediting the technology for meaningful boosts to growth. Reports on adoption are mixed: Apollo Academy reports a cooling in AI adoption rates among large corporations, even as internal development ramps up. But AI coding tool installation continues to rise. Tracking the number of installs of the top 4 AI coding tools, you’ll find a nearly 20% increase in daily installations over the past 30 days.

Returning to AI 2027, its predictions about agentic AI in late 2027 seem to be more or less on pace, with perhaps a month or so of deviation. The risk in all of this is mistaking familiarity for maturity. The awe has worn off, so it’s easy to assume the growth has too. But if you look at what METR’s testing shows, how users are integrating AI without fanfare, how developers are integrating AI tools into their work, and how capital is still flowing, the picture is clear. Progress remains swift.

⸻

AI development isn’t truly slowing—it’s maturing. As the initial novelty wears off, real advances continue beneath the surface, driven by capability gains, steady investment, and evolving user behavior.

-

Work Life Balance

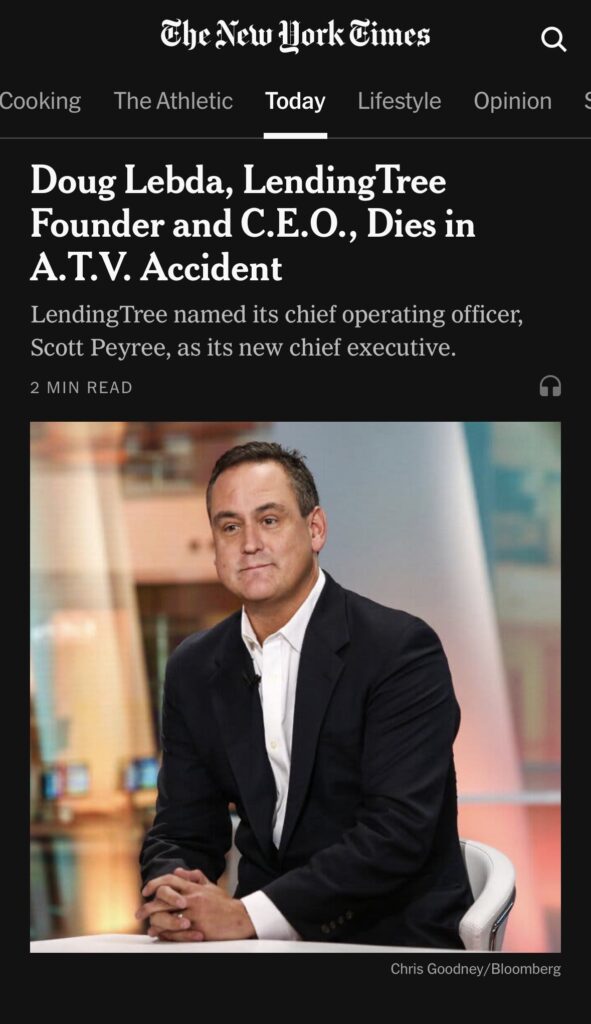

Yesterday, I saw this NYTimes story about the sudden, accidental death of LendingTree’s CEO.

He was the founder and longtime CEO of the company. He was a multimillionaire and a vital part of the company’s leadership team. Yet the lede of the story showed just how replaceable he was:

LendingTree named its chief operating officer, Scott Peyree, as its new chief.

So yes, folks, even the most important of corporate officers can be replaced mere hours after an accident. It reminds me that the most important things in life happen not at work (where you can be replaced at will) but at home and with your family. I doubt that his wife has already named his replacement.

-

Friday Links (Oct. 10)

- Maginative: Figma taps Google’s Gemini for Faster, Enterprise-Ready AI Inside its Design Platform (Oct 9, 2025)

Integrations will enhance image generation and editing within Figma and help with enterprise governance, allowing admins to control AI feature access and data usage for model training.

- WSJ: Exclusive | Microsoft Tries to Catch Up in AI With Healthcare Push, Harvard Deal (Oct 8, 2025)

Microsoft aims to become a leading AI chatbot provider, reducing its reliance on OpenAI by focusing on healthcare applications for its Copilot assistant. This update, developed in collaboration with Harvard Medical School, will offer more credible health information, and Microsoft is developing tools to help users find healthcare providers.

- Google: Introducing the Gemini 2.5 Computer Use model (Oct 7, 2025)

The new model empowers agents to interact directly with user interfaces for tasks like filling forms and navigating web pages. The possibilities are immense, and software testing seems like a great candidate for tools like this.

- NY Times: What the Arrival of A.I. Video Generators Like Sora Means for Us (Oct 9, 2025)

Sora has become so realistic that it undermines the reliability of video as proof of events. It’s simply difficult to distinguish between real and fake videos.

- WSJ Opinion: AI and the Fountain of Youth (Oct 8, 2025)

AI is accelerating drug development, analyzing medical data, and improving diagnostics, potentially leading to longer, healthier lives. “Thanks to AI, the process of identifying and developing new drugs, once a decade long slog, is being compressed into months.”

- WSJ Opinion: I’ve Seen How AI ‘Thinks.’ I Wish Everyone Could. (Oct 9, 2025)

Understanding how AI models function, including their training data and mathematical structure, is crucial, especially as AI increasingly impacts human endeavors like writing and art.

- WSJ: AI Investors Are Chasing a Big Prize. Here’s What Can Go Wrong. (Oct 5, 2025)

Investing in AI is risky due to the high costs, uncertain timelines, and potential for competition. I’d argue that these risks are present in almost any investment decision.

-

Thursday Links (Oct. 9)

- Simon Willison: gpt-image-1-mini (Oct 6, 2025)

OpenAI quietly released gpt-image-1-mini, a smaller and cheaper image generation model. The results are impressive and inexpensive, as mere pennies net good results.

- Simon Willison: GPT-5 Pro (Oct 6, 2025)

GPT-5 Pro has a September 30, 2024 knowledge cutoff and a 400,000-token context window.

- Sam Altman: Sora update #1 (Oct 3, 2025)

OpenAI is planning two major changes to Sora based on user feedback: providing rightsholders with more granular control over the use of their characters in generated videos, and implementing a revenue-sharing model with rightsholders for video generation.

- Morningstar, Inc.: The AI bubble is 17 times the size of the dot-com frenzy – and four times subprime, this analyst argues (Oct 3, 2025)

MacroStrategy Partnership argues the AI bubble is significantly larger than both the dot-com and 2008 real estate bubbles. This claim, of course, can only be proved after the “bubble” pops, so like most economic forecasting, only time will tell. It does seem quite clear that investment in AI infrastructure is high.

- Stratechery: OpenAI’s Windows Play (Oct 7, 2025)

OpenAI has made a flurry of announcements, including partnerships with Oracle, Nvidia, Samsung, SK Hynix, and AMD, as well as new product offerings like Instant Checkout and Sora 2. These moves suggest that OpenAI is positioning itself to be the “Windows of AI” by creating a platform where applications reside within ChatGPT, similar to how Windows became the dominant PC operating system.

- Alex Tabarrok: The ai Boom (Oct 5, 2025)

Anguilla’s internet domain, .ai, is experiencing a massive surge in registrations due to the booming interest in artificial intelligence. This increase in .ai domain registrations has become a major source of income for the small island nation, now contributing nearly half of its state revenues.

- The Register: McKinsey wonders how to sell AI apps with no measurable benefits (Oct 9, 2025)

Vendors should demonstrate clear value to line-of-business decision-makers who are increasingly weighing AI investments against staffing costs. Hand-waving and chanting “AI” is not a good strategy to explain rising costs.

- WSJ: Elon Musk Gambles Billions in Memphis to Catch Up on AI (Oct 5, 2025)

xAI is investing heavily in the Memphis area, building massive data centers powered by a new power plant to support its chatbot Grok.

-

Tuesday Links (Oct. 7)

- WSJ: OpenAI, AMD Announce Massive Computing Deal, Marking New Phase of AI Boom (Oct 6, 2025)

OpenAI will purchase 6 GW of AMD chips and may take up to a 10% equity stake in AMD, a huge win for AMD as it battles the dominant AI chipmaker, Nvidia.

- WSJ: Anthropic and IBM Partner in Bid for AI Business Customers (Oct 7, 2025)

Anthropic and IBM are partnering to integrate Anthropic’s Claude AI models into IBM’s software. “Kareem Yusuf, IBM’s senior vice president of ecosystem and strategic partners, said the Armonk, N.Y.-based company initiated the partnership after seeing how well Anthropic’s models performed on its own benchmarks, and recognizing they shared a focus on corporate customers.”

- NY Times: Recruiters Use A.I. to Scan Résumés. Applicants Are Trying to Trick It. (Oct 7, 2025)

Job seekers are increasingly using hidden instructions in their résumés to manipulate AI screening tools, as “[R]oughly 90 percent of employers now use A.I. to filter or rank résumés.”

- NY Times: Elon Musk Gambles on Sexy A.I. Companions (Oct 6, 2025)

xAI launched two sexually explicit chatbots to engage users with increasingly raunchy content as they progress through conversation levels. What could go wrong with this!?

- Axios: The biggest sign yet of an AI bubble is starting to appear (Oct 3, 2025)

AI tech companies are leveraging debt, sometimes hidden through private lenders and special purpose vehicles, to fund their AI infrastructure buildout.

- NY Times: We Finally Have Free Anti-Robocall Tools That Work (Oct 2, 2025)

iOS 26 features a new call screening technology that uses AI to ask callers for their name and the reason for the call. I enabled this personally last week. Zero robocallers since then.

-

Friday Links (Oct. 3)

- Scott Aaronson: The QMA Singularity (Sep 27, 2025)

“I had tried similar problems a year ago, with the then-new GPT reasoning models, but I didn’t get results that were nearly as good. Now, in September 2025, I’m here to tell you that AI has finally come for what my experience tells me is the most quintessentially human of all human intellectual activities: namely, proving oracle separations between quantum complexity classes.”

- WSJ: Walmart CEO Issues Wake-Up Call: ‘AI Is Going to Change Literally Every Job’ (Sep 26, 2025)

“It’s very clear that AI is going to change literally every job.” Doug McMillon, Walmart CEO

- WSJ: Why Meta Thinks It Can Challenge Apple in Consumer AI Devices (Sep 26, 2025)

“But Meta is playing a long game with a very ambitious target. The company apparently believes that Apple’s dominance of consumer devices is vulnerable in the AI age.”

- Simon Willison’s Weblog: ForcedLeak: AI Agent risks exposed in Salesforce AgentForce (Sep 26, 2025)

Security researchers at Noma Security discovered a vulnerability in Salesforce AgentForce that allowed for the exfiltration of lead data. The vulnerability was made possible due to an expired domain still being whitelisted in Salesforce’s Content Security Policy (CSP). Salesforce has since plugged the exploit.

- NY Times: Apple’s New AirPods Offer Impressive Language Translation (Sep 18, 2025)

The new AirPods Pro 3 feature real-time language translation using AI, allowing users to understand conversations in different languages, offering a seamless and practical way to improve communication for travelers and immigrants.

- AP News: Judge approves $1.5 billion copyright settlement between AI company Anthropic and authors (Sep 25, 2025)

A federal judge has preliminarily approved a $1.5 billion settlement between Anthropic and authors who accused the AI company of illegally using nearly half a million pirated books to train its chatbots, with authors and publishers potentially receiving about $3,000 per book.

- Semafor: Anthropic irks White House with limits on models’ use (Sep 17, 2025)

“Anthropic recently declined requests by contractors working with federal law enforcement agencies because the company refuses to make an exception allowing its AI tools to be used for some tasks, including surveillance of US citizens.”

- WSJ: Spending on AI Is at Epic Levels. Will It Ever Pay Off? (Sep 25, 2025)

“The artificial-intelligence boom has ushered in one of the costliest building sprees in world history.” Historically, this feels akin to the massive railroad boom in the 19th century (and perhaps the massive bust that followed).

- NY Times: A.I. Could Make the Smartphone Passé. What Comes Next? (Sep 8, 2025)

Some predict that AI assistants will become the central operating system of personal computing.

- NY Times: How ‘Clanker’ Became an Anti-A.I. Rallying Cry (Aug 31, 2025)

Clanker, a term from Star Wars, has grown more popular in online discussions as a derogatory slur against AI and robots, fueled by frustrations over AI-generated content, job automation, and the increasingly human-like qualities of AI.

-

AI Pricing Trends

When Disney launched Disney+ in late 2019, it came to market with a really low price: $6.99 per month. The strategy was obvious: use a bargain price to quickly build a subscriber base and compete with Netflix.

It worked. Families eagerly added Disney+ to their lineup of streaming services, drawn by its deep library of shows and movies. But as the platform grew, investors started pushing Disney to make the service profitable. Over the next few years, Disney steadily raised prices. Today, the ad-free tier costs nearly three times what it did at launch.

This story isn’t just about streaming. It’s a preview of what’s coming with AI services.

⸻

The $20 Benchmark for AI

When OpenAI launched ChatGPT Plus at $20 a month, it wasn’t the product of intricate economic modeling. Instead, it reflected an attempt to recoup some of the enormous costs behind the scenes—training models, running massive server farms, and paying world-class researchers.

That $20 price point quickly became the de facto benchmark for consumer-facing AI tools, with Anthropic and others adopting similar rates.

But OpenAI has been clear that its ambitions go far beyond the current offering. Its Stargate initiative involves building massive infrastructure, partnering globally, and spending billions on data centers. At some point, investor money won’t be enough—they’ll need sustainable revenue.

And just like Disney, the path is clear: grow the subscriber base, then gradually raise prices once users view the product as indispensable.

⸻

The Coming Price Climb

Right now, $20 a month feels reasonable. But look ahead 5–10 years. As AI capabilities expand, it’s easy to imagine prices climbing to $50, $60, or even $100 per month.

For individual consumers, that may be tough to swallow. A household with multiple subscriptions could find itself spending several hundred dollars a month on AI tools.

For businesses, however, the math looks different. If AI can make an employee just 10% more productive, the return on investment is obvious. An employee earning $60,000 annually who produces the equivalent of $66,000 in value thanks to AI easily justifies a $100 subscription. For programmers or knowledge workers achieving productivity gains of 50% or more, companies might begrudgingly pay hundreds—even thousands—per employee, per month.
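To make that math concrete, here is a rough sketch in Python. It assumes, as the example above does, that a productivity gain translates directly into added value proportional to salary, which is a simplification. The function name `subscription_roi` and its output fields are my own illustration, not anything taken from a vendor’s pricing model.

```python
def subscription_roi(salary: float, productivity_gain: float, monthly_price: float) -> dict:
    """Compare the yearly value of a productivity gain with the yearly subscription cost.

    Assumption: added value scales linearly with salary (a simplification).
    """
    added_value = salary * productivity_gain   # e.g. 10% of a $60,000 salary
    annual_cost = monthly_price * 12           # subscription billed monthly
    return {
        "added_value": added_value,
        "annual_cost": annual_cost,
        "net_benefit": added_value - annual_cost,
        # Monthly price at which the subscription exactly pays for itself
        "break_even_monthly_price": added_value / 12,
    }


if __name__ == "__main__":
    result = subscription_roi(salary=60_000, productivity_gain=0.10, monthly_price=100)
    for name, value in result.items():
        print(f"{name}: ${value:,.0f}")
```

Under these assumptions, a $100 subscription costs $1,200 a year against roughly $6,000 in added value, and the break-even price sits around $500 a month. That gap is the room vendors have to raise prices.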

The economics are compelling, and the pressure to raise prices is certain.

⸻

AI-Adjacent Tools Will Follow

This dynamic won’t be limited to large language models. AI-adjacent tools—platforms like Jira or Siteimprove—are racing to integrate AI features into their products. The added capabilities will deepen customer reliance. But once the early adoption phase passes, I expect these vendors to raise prices as well.

It’s the same playbook: demonstrate new value, increase lock-in, then adjust pricing upward.

⸻

The Staffing Equation

All of this has implications beyond budgets. If AI makes employees 50% more efficient, organizations will rethink staffing structures. Efficiency gains don’t automatically translate into cost savings unless roles are consolidated or organizations grow.

Take three departments, each with a similar role. If AI tools boost each person’s productivity by 50%, the organization suddenly has capacity for 4.5 units of work when only three are needed. The logical response is to reduce headcount—perhaps to two positions covering all three departments. But that requires some degree of centralization to realize these gains. Better options include company growth or redeploying personnel in areas of need or opportunity. There will be disruption of the workforce, but it doesn’t have to lead to layoffs.
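The capacity arithmetic above can be sketched in a few lines of Python. This is a back-of-the-envelope model, assuming work is perfectly divisible and interchangeable across departments (which is exactly the centralization caveat). The helper names `team_capacity` and `headcount_needed` are hypothetical.

```python
import math


def team_capacity(headcount: int, productivity_gain: float) -> float:
    """Units of work a team can cover if each person gets the same productivity boost."""
    return headcount * (1 + productivity_gain)


def headcount_needed(work_units: float, productivity_gain: float) -> int:
    """Smallest whole number of people who can cover the given workload."""
    return math.ceil(work_units / (1 + productivity_gain))


if __name__ == "__main__":
    gain = 0.5  # the 50% productivity boost from the example
    print(f"Capacity of 3 people: {team_capacity(3, gain)} units")
    print(f"People needed for 3 units: {headcount_needed(3, gain)}")
```

With a 50% gain, three people cover 4.5 units of work, and two people cover the original three. The 1.5 units of slack is what growth or redeployment would need to absorb.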

⸻

Planning for the Future

The lesson from Disney+ is clear: early low prices are temporary. AI services are following the same trajectory, and organizations should prepare now.

- Expect rising subscription costs—both for core AI platforms and for AI-enhanced tools.

- Budget for increases of 50% or more annually over the next few years.

- Plan staffing structures to capture the efficiency gains AI makes possible. I’m sure companies will prefer growth and redeployment, but that’s not always assured.
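For budgeting purposes, it helps to see how quickly a 50% annual increase compounds. The sketch below is illustrative only: it takes the $20 benchmark and the 50% planning figure from this post and assumes a constant rate over a hypothetical three-year horizon. The function name `project_prices` is my own.

```python
def project_prices(start_price: float, annual_increase: float, years: int) -> list[float]:
    """Return the price at year 0 and at the end of each following year.

    Assumption: the same percentage increase is applied every year.
    """
    prices = [float(start_price)]
    for _ in range(years):
        prices.append(prices[-1] * (1 + annual_increase))
    return prices


if __name__ == "__main__":
    for year, price in enumerate(project_prices(20, 0.50, 3)):
        print(f"Year {year}: ${price:.2f} per month")
```

At that rate, a $20 plan would run $67.50 a month after three years, which is why it pays to model the increase rather than carry today’s price forward.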

AI will reshape productivity in profound ways. But as with streaming, the honeymoon pricing phase won’t last forever.

-

Friday Links

- Cloudflare Introduces NET Dollar (Sep. 25, 2025)

Cloudflare plans to launch NET Dollar, a USD-backed stablecoin, to facilitate instant and secure transactions for AI agents. It will enable microtransactions and reward creators, developers, and AI companies for unique content and valuable contributions, ultimately fostering a more open and sustainable internet economy.

- Simon Willison: GitHub Copilot CLI is now in public preview (Sep 25, 2025)

With options for GitHub Models, Claude Sonnet 4, or GPT-5, the tool integrates with existing GitHub Copilot accounts for billing.

- Simon Willison: gpt-5 and gpt-5-mini rate limit updates (Sep 12, 2025)

OpenAI increased rate limits for GPT-5 and GPT-5-mini across various usage tiers, allowing for more tokens per minute, putting OpenAI ahead of Anthropic but behind Gemini.

- European Digital Rights (EDRi): Chat Control: What is actually going on? (Sep 24, 2025)

A contentious EU proposal dubbed “Chat Control,” a draft Child Sexual Abuse (CSA) Regulation, would mandate scanning private messages, even in end-to-end encrypted services, using AI filters. Critics argue it amounts to mass surveillance and undermines privacy. The proposal faces legal and political hurdles, including resistance from the EU Parliament and some member states.

- Google: The Digital Markets Act: time for a reset (Sep 25, 2025)

Alongside Apple, Google is also advocating for the EU’s DMA to be replaced with something that causes less unintended harm to users and businesses.

- WSJ: The City Leading China’s Charge to Pull Ahead in AI (Sep 12, 2025)

Hangzhou, China, is emerging as a key AI hub driven by supportive government policies, established tech companies like Alibaba, the new DeepSeek model, and a growing pool of talent. This transformation highlights China’s ambition to lead in AI technology development.

- Ars Technica: Apple demands EU repeal the Digital Markets Act (Sep 25, 2025)

Aside from the fact that the article title is an absurd overstatement, the core idea is true: Apple is lobbying for the EU’s DMA to be scrapped and replaced, arguing it has made it too hard to do business and innovate in Europe.

- WSJ: AI Agents Are Getting Ready to Handle Your Whole Financial Life (Sep 17, 2025)

As usual, concerns about risks and regulations exist, but financial institutions are developing AI tools that can analyze portfolios, execute trades, and provide financial advice, potentially democratizing access to financial expertise.
