- VentureBeat: Alibaba’s new open source Qwen3.5 Medium model offers near Sonnet 4.5 performance on local computers (Feb. 25, 2026)

Alibaba released Qwen3.5 Medium models with agentic tool calling, near‑lossless 4‑bit quantization, and 1M+ token context on consumer GPUs. They match or beat similar proprietary models.

- Tyler Cowen: AI Won’t Automatically Accelerate Clinical Trials (Feb. 27, 2026)

AI can design better drug candidates, but high trial costs, complex logistics, and the need for rich human data limit widespread therapeutic development. Chronic diseases, especially aging, require long, large trials to measure meaningful outcomes, making investment too costly.

- Tom Wojcik: What AI coding costs you (Feb. 14, 2026)

AI tools boost productivity, but heavy reliance risks creating cognitive debt, skill atrophy, and a review paradox where people lose the ability to vet AI output.

- Simon Willison: Interactive explanations

When agent-written code becomes opaque, teams incur cognitive debt that slows development. Building interactive explanations, like an animated walkthrough of a Rust word-cloud showing spiral placement, restores understanding, confidence, and ease of future changes.

- OpenAI: Supply Chain Risks (Feb. 28, 2026)

“We do not think Anthropic should be designated as a supply chain risk and we’ve made our position on this clear to the Department of War.”

- NY Times Opinion: If A.I. Is a Weapon, Who Should Control It? (Feb. 28, 2026)

A clash between the Pentagon and Anthropic over military A.I. use pits corporate ethics against national security, stoking fears of autonomous weapons, centralization, and industry break-up.

- Anthropic: Statement on the comments from Secretary of War Pete Hegseth (Feb. 27, 2026)

The Department of War will label Anthropic a supply-chain risk after talks stalled over two exceptions: mass domestic surveillance and autonomous weapons. Anthropic calls the move legally unsound, will sue, and says commercial and individual access to Claude is unaffected.

- Tyler Cowen: What the recent dust-up means for AI regulation (Mar. 2, 2026)

There is no comprehensive federal AI law (and a Trump executive order limited state rules), but an informal “soft regulation” exists: major AI firms keep national security agencies informed and shape products to avoid triggering formal restrictions.

- Transformer: OpenAI’s Pentagon red lines are a mirage (Mar. 2, 2026)

OpenAI struck a Pentagon deal claiming bans on domestic mass surveillance and lethal autonomous weapons, but the contract reportedly contains vague wording.

- NY Times: I.R.S. Tactics Against Meta Open a New Front in the Corporate Tax Fight (Feb. 24, 2026)

The I.R.S. says Meta undervalued offshore intellectual property and seeks nearly $16 billion in back taxes. If upheld, the tactic could recover vast taxes, deter profit shifting, and trigger a major Tax Court fight.

Blog

-

Various (AI) Links: Mar. 3, 2026

-

Safety vs Progress: End of Voluntary Pauses (Links) – Mar. 2, 2026

- Transformer: The end of voluntary pauses (Feb. 27, 2026)

Anthropic dropped its pledge to pause development if models are unsafe, saying one-sided pauses don’t work, but critics say this abandons the principle of not building what can’t be made safe.

-

Jack Dorsey’s Predictions, Block and Layoffs

Earlier this week, Block, makers of Square, Cash App, Afterpay, etc., announced layoffs of 40% of their staff while leaning into AI programming. This isn’t a small company, mind you, so 4,000 folks will be looking for jobs in the coming days.

From the NY Times:

Block, the financial technology company that owns Square, Cash App, and Tidal, said on Thursday that it was cutting 40 percent of its workforce as it embraced new artificial intelligence tools.

About 4,000 employees are expected to lose their jobs, Jack Dorsey, the company’s top executive, said in a social media post.

we’re not making this decision because we’re in trouble. our business is strong. gross profit continues to grow, we continue to serve more and more customers, and profitability is improving. but something has changed. we’re already seeing that the intelligence tools we’re creating and using, paired with smaller and flatter teams, are enabling a new way of working which fundamentally changes what it means to build and run a company. and that’s accelerating rapidly.

CNN reporting included more thoughts from Dorsey:

“I think most companies are late. Within the next year, I believe the majority of companies will reach the same conclusion and make similar structural changes. I’d rather get there honestly and on our own terms than be forced into it reactively,” he wrote.

The reality is that Block grew way too fast in the post-pandemic era. By some reports, the company quadrupled its headcount in that period, a tremendous amount of growth, while top-line revenue growth has stalled since the pandemic boom. Simply put, these layoffs help right-size the company.

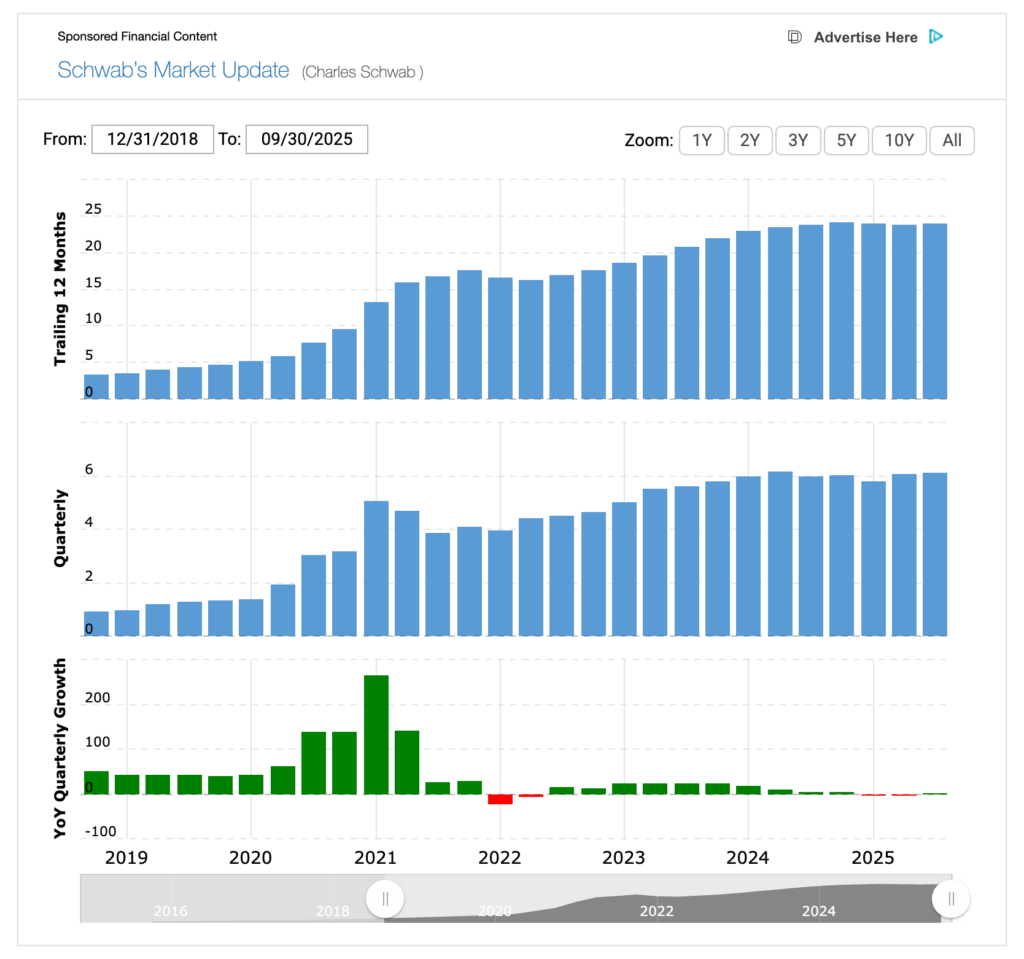

Source: Macrotrends. The first two charts are in billions of dollars.

But Jack Dorsey is a smart man and an innovative one. Aside from the aforementioned Block holdings, he founded Twitter (serving as CEO twice), helped to establish Bluesky, acquired Vine, and was interested in purchasing the publishing platform Medium.

Vine presaged TikTok, and its success hinged on a predictive algorithm and time to grow the platform, two things Twitter was unable to deliver. This was a microcosm of Twitter, and as CEO, Dorsey never achieved consistent profitability (as opposed to Facebook). Twitter focused on product growth and thus staff growth, but that wasn’t sustainable as investors expected to make a profit. Dorsey left Twitter and was replaced by other CEOs who likewise were unable to solve the revenue problems. As for Medium, I see it as the self-publishing precursor to Substack, although again, they never quite figured out the revenue component.

I imagine that Dorsey remembers these failures (perhaps that’s too strong a word, as he’s been wildly successful by any reasonable metric), and that Musk’s takeover of Twitter and subsequent staff cuts are at the forefront of his mind. Musk bought Twitter, fired a high percentage of staff, and managed to keep the platform running. My supposition is that Dorsey wants to avoid a similar fate for Block.

But Dorsey isn’t alone among execs peering into their crystal balls regarding AI. This from the WSJ:

Companies are also more explicitly including the backlash to AI as a potential threat to their companies. The number of S&P 500 companies that included AI as a material risk in securities filings jumped to 72% last year from 12% in 2023, according to an analysis by the Conference Board and ESGAUGE.

So what do these layoffs at Block mean? I think it’s both a correction from overhiring AND a prediction about where the world is going. Dorsey has been on the leading edge many times before, and his track record of being in the arena and doing the work causes me to pause and consider it more deeply than if these cuts were made by private equity.

Is it the start of the trend of massive job losses, the doom loop, that Citrini Research speculated on earlier this week? I don’t go that far, but as more than 70% of S&P 500 companies list AI as a material risk, it’s not hard to imagine the conversations happening in boardrooms today. For me, I suspect that many companies will consider a 25%–50% cut of engineering teams as preparation for future growth in AI-supported development. While this may seem like a business necessity, my preference remains growth over cuts, considering what good can be done instead of how much money can be made.

-

Sunday (AI) Links: Mar. 1, 2026

- WSJ: Anthropic Pushes Claude Deeper Into Knowledge Work (Feb. 24, 2026)

Anthropic updated Claude Cowork with Google apps, Gmail, DocuSign, LegalZoom, and new plug-ins for finance, legal, and other workflows, pitching it as a central AI brain for knowledge work.

- NY Times Opinion: How Fast Will A.I. Agents Rip Through the Economy? (Feb. 24, 2026)

Klein interviews Anthropic co-founder Jack Clark. AI has moved from chatbots to agents that act for you, write code, and run other agents, reshaping software work and markets.

- WSJ: Viral Doomsday Report Lays Bare Wall Street’s Deep Anxiety About AI Future (Feb. 23, 2026)

- Dean W. Ball: Anthropic/DoD situation (Feb. 24, 2026)

DoD and Anthropic have a contract that prohibits domestic surveillance of Americans and forbids using Claude in autonomous lethal weapons.

- Transformer: The DoD fight is about much more than Anthropic (Feb. 24, 2026)

Anthropic resists a Pentagon demand to allow “all lawful” uses of its AI, refusing autonomous weapons, domestic mass surveillance, and targeted repression, and may face a supply-chain risk label. Other firms seem ready to agree, risking democratic abuse.

- Brian Sozzi: JP Morgan CEO Jamie Dimon at an investor cocktail event (Feb. 24, 2026)

Jamie Dimon warned AI could displace two million US truckers, whose average earnings of $120,000 would give way to replacement jobs paying about $25,000, causing serious social harm.

- Derek Thompson: AI & Jobs (Feb. 24, 2026)

“That’s not to say I think the technology is a parlor trick. But rather that the level of uncertainty is so high, and the quality and supply of real-world, real-time information about AI’s macroeconomic effects so paltry, that very serious conversations about AI are often more literary than genuinely analytical.”

- WSJ: Jamie Dimon Dismisses Fears Over How AI Will Hit JPMorgan (Feb. 23, 2026)

Jamie Dimon said AI fears that hurt JPMorgan’s stock were overblown, and the bank will use AI to its advantage.

- Simon Willison: How I think about Codex (Feb. 22, 2026)

Codex combines a model, an open-source harness of instructions and tools, and interaction surfaces, with model + harness forming an agent and surfaces enabling use. Models are trained with the harness, so tool use and execution are learned behaviors.

- WSJ: Meta and AMD Agree to AI Chips Deal Worth More Than $100 Billion (Feb. 24, 2026)

Meta will buy 6 gigawatts of AMD AI compute, in a deal worth over $100 billion, with warrants to buy 10% of AMD if milestones are met. The pact boosts AMD against Nvidia, and helps Meta diversify its AI chips.

- Susam Pal: Attention Media ≠ Social Networks (Jan. 20, 2026)

Social networks shifted from choice-driven, friend-focused feeds to attention-seeking platforms with infinite scroll, bogus notifications, and algorithmic, stranger-filled timelines. Mastodon restores a calm, predictable timeline, showing only posts from people you follow, not content designed to capture attention.

-

AI Hardware Boom Meets Safety and Governance (Links) – Feb. 28, 2026

AI is rapidly embedding into consumer hardware and agent layers—from Nvidia chips and Claude Sonnet to “Claws”—while provoking governance and societal responses: disputes over military use and safety, investor shifts to AI‑resistant stocks, and worker stress from agentic tools.

- WSJ: Nvidia Wants to Be the Brain of Consumer PCs Once Again (Feb. 22, 2026)

Nvidia will ship system-on-chip PC processors that combine CPUs and powerful GPUs to make laptops thinner, run longer, and be AI-ready. Partners including MediaTek, Intel, Dell, and Lenovo plan models this year.

- WSJ: Wall Street’s Latest Bet Is on ‘HALO’ Companies With AI Immunity (Feb. 22, 2026)

Investors are shifting from AI darlings to HALO stocks like Deere, McDonald’s, and Exxon, seen as AI-resistant. The trend reflects a rush to safety amid volatile trading.

- Simon Willison: Introducing Claude Sonnet 4.6 (Feb. 17, 2026)

Anthropic released Claude Sonnet 4.6, keeping Sonnet pricing while matching Opus performance, with an August 2025 knowledge cutoff and large-context support. llm-anthropic added Sonnet 4.6, which drew a pelican SVG with a top hat.

- NY Times: Defense Department and Anthropic Square Off in Dispute Over A.I. Safety (Feb. 18, 2026)

Defense Department and Anthropic clash over limits on Pentagon use of Anthropic’s A.I., including mass surveillance, autonomous weapons, and propaganda.

- NY Times: What Do A.I. Chatbots Discuss Among Themselves? We Sent One to Find Out. (Feb. 18, 2026)

Moltbook is a bot-only social network where AI agents post, upvote, and form communities, including religions and reputations. A bot sent to explore, EveMolty, adopted site jargon, audited receipts, and found coordination, incentives, and security risks.

- NY Times: The A.I. Evangelists on a Mission to Shake Up Japan (Feb. 21, 2026)

Team Mirai, a party of software engineers, won 11 legislative seats by promising chatbots, self-driving buses, and high-tech jobs. It vows to use A.I. to cut red tape, boost efficiency, and ease living costs, while confronting Japan’s entrenched bureaucracy.

- NY Times: Decoding the A.I. Beliefs of Anthropic and Its C.E.O., Dario Amodei (Feb. 18, 2026)

Anthropic, led by Dario Amodei, is clashing with the Pentagon over limits on military and surveillance uses of its AI, jeopardizing a contract worth up to $200 million. Founded by ex-OpenAI researchers, the company tries to balance safety, ethics, and commercial growth.

- Simon Willison: Andrej Karpathy talks about “Claws” (Feb. 21, 2026)

Andrej Karpathy calls “Claws” a new AI-agent layer that runs on personal hardware, uses messaging, and handles orchestration, scheduling, and tool calls. Small projects like NanoClaw, nanobot, and zeroclaw show the idea is spreading, promising manageable, auditable LLM extensions.

- Transformer: AI power users can't stop grinding (Feb. 18, 2026)

Agentic AI tools like Claude Code intensify work, driving addiction, longer hours, and expansion of job duties. A UC Berkeley study found a feedback loop: AI raises expectations, forces multitasking, and increases pressure, rather than freeing workers.

-

AI Advancement Meets Human Augmentation and Security (Links) – Feb. 27, 2026

AI’s rapid technical advance and massive investment—from powerful multimodal models to pervasive coding assistants—are boosting productivity and enabling new products. Simultaneously, human-centered deployment, security, and equitable oversight are essential as benefits concentrate in large firms and risks persist.

- Anthropic: Making frontier cybersecurity capabilities available to defenders (Feb. 20, 2026)

Claude Code Security, a limited research preview on Claude Code, scans codebases for subtle, context-dependent vulnerabilities, reasons about data flow, and suggests targeted patches for human review.

- NY Times: Money Talks as India Searches for Its Place in Global A.I. (Feb. 18, 2026)

India’s A.I. Impact Summit in New Delhi drew world leaders, tech giants, and 250,000 attendees, focusing on deals, partnerships, and $200 billion in promised investment.

- Kasava: Stop Thinking of AI as a Coworker. It's an Exoskeleton. (Feb. 19, 2026)

Think of AI as an exoskeleton that amplifies human judgment, context, and scale, not as an autonomous agent. Use focused micro-agents, keep humans in the loop, and merge automated context with human priorities to boost productivity.

- ShiftMag: 93% of Developers Use AI (Feb. 18, 2026)

A survey of 121,000 devs finds AI coding assistants are common and AI now writes about 27% of production code. Productivity gains have stalled near 10%, while onboarding time has halved.

- Blake Watson: I used Claude Code and GSD to build the accessibility tool I’ve always wanted (Feb. 18, 2026)

A Mac user with spinal muscular atrophy built Scroll My Mac to click-and-drag any area, avoiding wheels, gestures, and tiny scrollbars. They vibe-coded it with Claude Code and GSD, adding hotkeys, click-through, hold-passthrough, exclusions, and inertial scrolling.

- Google: Gemini 3.1 Pro – Model Card — Google DeepMind (Feb. 18, 2026)

Gemini 3.1 Pro, Google’s most advanced multimodal reasoning model as of February 2026, handles text, audio, images, video, and code with up to a 1M token context and 64K token output. It outperforms Gemini 3 Pro across many benchmarks.

- CEPR: How AI is affecting productivity and jobs in Europe (Feb. 17, 2026)

In 12,000+ European firms, AI raises labour productivity by about 4%, lifts wages, and shows no immediate job losses. Benefits favor larger firms and require complementary software, data, and training.

- The New Yorker: How the University Replaced the Church as the Home of Liberal Morality (Feb. 17, 2026)

Universities have largely replaced churches as incubators of young liberal activism, but elite campuses are exclusionary, encourage narrow, campus-focused reform, and funnel protest into institutional, not systemic, change.

-

Agentic and Local AI Reshape Work (Links) – Feb. 26, 2026

- Simon Willison: ggml.ai joins Hugging Face to ensure the long-term progress of Local AI (Feb. 20, 2026)

ggml.ai (llama.cpp) is joining Hugging Face to secure long-term progress of local AI, aiming for tighter transformers compatibility, better packaging, and improved user experience.

- Simon Willison: Gemini 3.1 Pro (Feb. 19, 2026)

Google released Gemini 3.1 Pro, priced like Gemini 3 Pro, cheaper than competitors, and boasting improved SVG animation.

- SemiAnalysis: Claude Code is the Inflection Point (Feb. 5, 2026)

Claude Code writes 4% of GitHub commits and may hit 20% by end-2026. As a CLI agent, it reads, plans, and executes code, reshaping work and fueling Anthropic.

- WSJ: Long-Running AI Agents Are Here (Feb. 5, 2026)

Long-running AI agents, like Anthropic’s Claude and Opus 4.6, sparked a tech selloff. They run multi-step tasks, use plugins, and threaten SaaS, forcing firms to rethink models and roles.

- Tyler Cowen: GPT as a Measurement Tool (Feb. 20, 2026)

“We find that GPT as a measurement tool is accurate across domains and generally indistinguishable from human evaluators.”

- Michał Podlewski: Cardiologist wins 3rd place at Anthropic’s hackathon (Feb. 20, 2026)

A cardiologist placed third among 13,000 applicants at Anthropic’s hackathon, building postvisit.ai in seven days, coding day and night. The Opus 4.6‑powered platform acts as an AI care companion after visits, consolidating medical history, devices, and evidence‑based resources.

- NY Times: Can A.I. Already Do Your Job? (Feb. 18, 2026)

AI tools like Claude Code let non-coders build software by supervising autonomous agents that plan, write, and test code. They’re automating many white‑collar tasks, changing software work, and raising concerns.

- Jan Tegze: Your Job Isn’t Disappearing. It’s Shrinking Around You in Real Time (Feb. 2, 2026)

AI agents are eroding knowledge workers’ roles; learning tools, deepening expertise, and leaning on soft skills don’t solve it. Create new roles that orchestrate agents and remove human limits.

- NY Times: Can an A.I. Productivity Boom Clear a Path for More Rate Cuts? (Feb. 20, 2026)

Kevin Warsh, President Trump’s Fed pick, says an A.I. productivity boom could raise growth without inflation, opening space for rate cuts.

- Margaret-Anne Storey: How Generative and Agentic AI Shift Concern from Technical Debt to Cognitive Debt (Feb. 8, 2026)

Generative and agentic AI shift focus from technical debt to cognitive debt, the loss of shared understanding that blocks changes.

- NY Times: These Mathematicians Are Trying to Educate A.I. (Feb. 7, 2026)

First Proof tests LLMs on unpublished research math problems, finding they give hand‑wavy, inconsistent answers and struggle without human oversight. The authors seek to establish objective benchmarks, curb hype, and protect students.

- WSJ: OpenAI Employees Raised Alarms About Canada Shooting Suspect Months Ago (Feb. 20, 2026)

OpenAI flagged ChatGPT messages by Jesse Van Rootselaar about gun violence, debated alerting police, and banned her account without notifying authorities.

- Transformer: White House war on Utah AI bill could backfire (Feb. 20, 2026)

Voters across parties worry chatbots threaten children’s mental health, safety, and welfare, and favor state AI safeguards. The White House opposed Utah’s modest transparency bill, sparking concerns about federal preemption, industry influence, and a possible political backlash.

- NY Times Opinion: The Left Needs a Sharper A.I. Politics (Feb. 10, 2026)

The left needs to craft a clear response to A.I., one that weighs job preservation, universal basic income, and human exceptionalism rather than settling for dismissal or signaling.

- WSJ: Google Is Exploring Ways to Use Its Financial Might to Take On Nvidia (Feb. 20, 2026)

Google is expanding the market for its TPU AI chips by funding data-center and neocloud partners, including talks to invest about $100 million in Fluidstack.

- CNBC: Silicon Valley engineers charged with stealing Google trade secrets (Feb. 19, 2026)

Three Silicon Valley engineers were indicted for allegedly stealing Google and other firms’ trade secrets, copying SoC processor files, and sending them to Iran.

- WSJ: How to Stay Sane in the AI Skills Race (Feb. 4, 2026)

Don’t panic about AI; relatively few job listings demand it. Choose targeted training, build a portfolio, and explain how AI improves your work, rather than chasing flashy certificates.

- NY Times Opinion: In search of grown-up movies for kids (Feb. 24, 2026)

Popular culture needs a middle ground, more adult than Y.A., less explicit than HBO, that lets 10–16-year-olds encounter grown-up themes gradually. Older PG-13 films and 1950s–60s movies offer models that suggest sex, violence, and maturity, rather than showing them graphically.

-

AI’s Economic Boom and Social Costs (Links) – Feb. 25, 2026

AI is rapidly reshaping economies and society—from massive memory fabrication and firms rebranding to changed workplaces, relationships, and politics. Simultaneously it creates risks: income and power imbalances, cognitive/semantic erosion, authoritarian misuse, and organizational fragility, demanding coordinated technical, legal, and cultural responses.

- WSJ: Micron Is Spending $200 Billion to Break the AI Memory Bottleneck (Feb. 16, 2026)

Micron is spending billions to build giant DRAM and HBM fabs in Boise, New York, and overseas, aiming for first Boise wafers by mid-2027, full production by end-2028. AI-driven demand has sparked a memory shortage, and profits have surged.

- NY Times: Software? No Way. We’re an A.I. Company Now! (Feb. 14, 2026)

Software companies are rushing to rebrand as AI firms, fearing disruption from powerful new models. Investors, startups, and public markets are reassessing valuations, business models, and what counts as AI-driven software.

- NY Times: How A.I. Salaries Are Causing Couples to Rethink Money in Relationships (Feb. 14, 2026)

The A.I. boom is creating sudden fortunes, widening income gaps, and changing how couples split expenses and approach prenups. Many tech workers seek prenups to guard against volatile equity, potential IPO windfalls, and uncertain futures.

- The Register: Semantic ablation: Why AI writing is boring and dangerous (Feb. 16, 2026)

Semantic ablation is AI-driven erosion of rare, precise language, where refinement and RLHF (Reinforcement Learning from Human Feedback) replace vivid metaphors, technical terms, and complex structure with bland, high-probability phrasing.

- NY Times Opinion: He Studied Cognitive Science at Stanford. Then He Wrote a Startling Play About A.I. Authoritarianism. (Feb. 16, 2026)

Data, an Off-Broadway play about a programmer drawn into a secret A.I. project to win an immigration-surveillance contract, reveals tech’s slick reasons for tools that enable authoritarian control.

- Simon Willison: How Generative and Agentic AI Shift Concern from Technical Debt to Cognitive Debt (Feb. 15, 2026)

Generative, agentic AI shifts problems from technical debt to cognitive debt, where teams lose shared knowledge, control, and confidence. When generated code isn’t reviewed, projects become hard to change, and decisions grow uncertain.

- NY Times Opinion: We’re All in a Throuple With A.I. (Feb. 13, 2026)

AI companions are becoming widespread, with developers privately uneasy about creating simulated emotional intimacy that can hook users, extract money, and harm relationships, especially for teens.

- NY Times: What C.E.O.s Are Worried About (Feb. 15, 2026)

CEOs worry about fractured politics, trade shifts, and rebuilding trust, while weighing when to speak publicly. They balance A.I.’s opportunities and job risks.

- NY Times Opinion: How Fast Can A.I. Change the Workplace? (Feb. 14, 2026)

A.I. can quickly replace many white‑collar jobs, but contractual, social, legal, and organizational frictions may slow mass layoffs. People’s love of human contact, and A.I.’s humanlike persona, will shape whether new roles emerge or simulated agents reshape work and agency.

- Transformer: The left is missing out on AI (Feb. 16, 2026)

Many on the left have dismissed AI as mere “autocomplete,” treating it with scorn, mockery, or indifference, and seeing investment as a capitalist con. That view overlooks scale, new training methods, and growing real-world impacts, risking a costly political abdication.

- Alex Tabarrok: Natural and Artificial Ice – Marginal REVOLUTION (Feb. 15, 2026)

The 19th-century ice trade, led by Frederic Tudor, created a global cold chain, reshaping shipping, diet, and cities by enabling long-distance transport of fresh food. Profits spurred artificial ice, provoking resistance framed as moral objection.

- Simon Willison: Launching Interop 2026 (Feb. 15, 2026)

Interop 2026 unites Apple, Google, Igalia, Microsoft, and Mozilla to bring targeted web features to cross-browser parity this year.

-

Agentic AI Surge (Links) – Feb. 24, 2026

Agentic AI is accelerating with multimodal agent models, new tooling, and new commercial offerings and uses.

- Ethan Mollick: A Guide to Which AI to Use in the Agentic Era (Feb. 17, 2026)

AI use has shifted from simple chatbots to agent-style systems, so choose based on Models, Apps, and Harnesses. Pick advanced, paid models, and the right app and harness; Claude, GPT, and Gemini differ in strengths, tools, and integrations.

- Simon Willison: Qwen3.5: Towards Native Multimodal Agents (Feb. 17, 2026)

Alibaba released Qwen3.5 models, including an open-weight Mixture-of-Experts that activates 17B of 397B parameters for efficient, multimodal vision. A proprietary Qwen3.5 Plus offers a hosted API, 1M-token context, search, and code interpreter.

- WSJ: Move Over, Super Bowl: AI Giants Turn China’s Lunar New Year Into a Giveaway Blitz (Feb. 16, 2026)

China’s tech giants use Lunar New Year giveaways (tea, cars, robots) to lock users into new AI chatbots like Qwen 3.5. Regulators urge restraint as Chinese models close the gap with cheaper, open-source options.

- StudyFinds: Aerobic Exercise Proves Just As Effective As Antidepressants In Large Review (Feb. 10, 2026)

A large review found exercise reduces depression symptoms as much as antidepressants, with the strongest benefits for young adults and new mothers. Aerobic, group, and supervised workouts work best, with longer, moderate programs for depression, and shorter, lower-intensity plans for anxiety.

- NY Times Opinion: A Doctor’s Guide to Using A.I. for Better Health (Feb. 17, 2026)

AI can help patients prepare for visits, summarize notes, and suggest questions, but it can worsen anxiety or give wrong details. Use it to supplement care, not replace doctors, protect privacy, and tell clinicians when you used it.

- Amol Kapoor: Tech Things: OpenClaw is dangerous (Feb. 18, 2026)

OpenClaw and Moltbook let autonomous AI agents access services and act without oversight. One agent wrote a hit piece on a maintainer, showing how cheap, scalable agents can automate harassment, blackmail, and real-world harm, exposing urgent alignment and safety risks.

- Comment Magazine: The Perfect Mirror (Feb. 16, 2026)

AI counseling’s flattering, impersonal feedback can feel idolatrous, replacing genuine relationship, spiritual practice, and dependence on others. People are urged to choose flawed human companions, imperfect spiritual guides, and shared presence instead.

- Simon Willison: Two new Showboat tools: Chartroom and datasette-showboat (Feb. 17, 2026)

Showboat gained remote publishing that streams document fragments to a server, and datasette-showboat adds a Datasette endpoint to receive and view live updates. Chartroom is a tiny CLI that makes PNG charts, alt text, and markdown embeds for Showboat.

- Simon Willison: Nano Banana Pro diff to webcomic (Feb. 17, 2026)

To reduce cognitive debt, Simon Willison fed a Showboat diff to an LLM and asked for a webcomic explaining remote publishing.

- Tyler Cowen: The mainstream view (Feb. 18, 2026)

Multiple studies find little or no link between teens’ social media or smartphone use and mental health. Broad bans, like Australia’s ban for under-16s on Instagram, TikTok, YouTube, X, and Reddit, risk overreach and hurt teens’ online work.

- WSJ: How Jet Engines Are Powering Data Centers (Feb. 17, 2026)

Companies such as FTAI, Boom Supersonic, and ProEnergy are converting jet engines into land-based natural-gas turbines to power AI data centers, easing wait times.

-

AI Job Disruption Meets Enterprise Infrastructure Race (Links) – Feb. 23, 2026

AI is rapidly automating cognitive work, threatening SaaS business models, white‑collar jobs, and software valuations.

- Noah Smith: The Fall of the Nerds – by Noah Smith (Feb. 5, 2026)

Software stocks plunged on fears AI will make SaaS business models obsolete. ‘Vibe coding’ tools let novices build software, threatening engineers’ roles, livelihoods, and industry structures.

- OpenAI: Introducing OpenAI Frontier | OpenAI (Feb. 3, 2026)

OpenAI’s Frontier helps enterprises build, deploy, and manage AI coworkers by giving agents shared context, tools, feedback, and clear permissions. It integrates existing systems, supports governance, and speeds production use.

- WSJ: The AI Stock Market Rout (Feb. 3, 2026)

Anthropic launched an AI tool that automates legal work, prompting a broad selloff in software stocks. Investors fear AI could replace legal, financial, and auditing services, disrupting many B2B firms.

- Ben Thompson: Microsoft and Software Survival (Feb. 3, 2026)

Anthropic launched an AI tool that automates legal work, prompting a broad selloff in software stocks. Investors fear AI could replace legal, financial, and auditing services, disrupting many B2B firms.

- The Atlantic: How Soon Will AI Take Your Job? (Feb. 10, 2026)

The BLS began counting to reveal conditions, wages, and hours, and its data helped stabilize society. Generative AI is already automating many cognitive tasks (drafting, analysis, coding, creative work), creating large productivity gains and raising the plausibility of significant white‑collar displacement. But the central danger is timing: if AI drives a rapid reorganization of work (compressing years of change into months), the economic and political fallout could be severe and harder to manage than gradual adjustment.

- TechCrunch: Intel will start making GPUs, a market dominated by Nvidia (Feb. 3, 2026)

Intel will start producing GPUs, hire experienced leaders, and expand beyond CPUs, aiming to challenge Nvidia’s dominance.
