I love the quote from George Mallory about climbing Mt. Everest:

When asked by a reporter why he wanted to climb Everest, Mallory purportedly replied, “Because it’s there.”

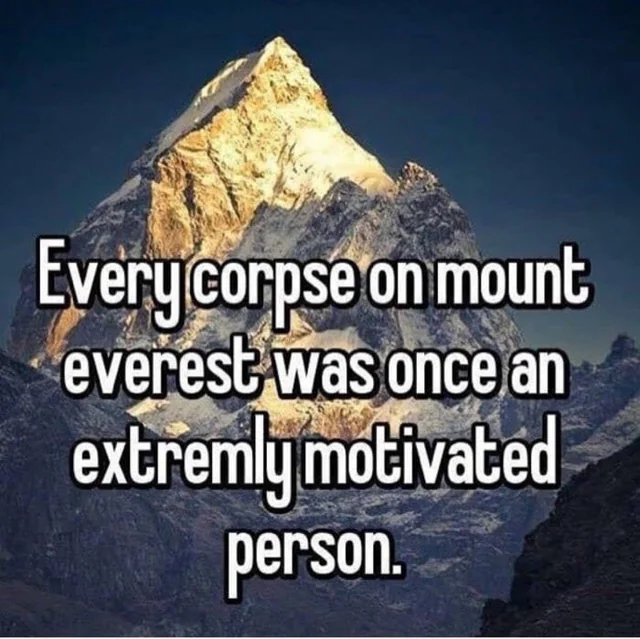

We all know that it didn’t turn out so well for Mr. Mallory, and 100 years later, his reply lives on as a meme.

Perhaps the same can be said for AI scientists: why do you build even more powerful AI systems? Because the challenge is there!

The race to build these systems is on. Companies left and right are dropping millions on talent in their attempt to build superintelligence labs. Meta, for example, has committed millions and millions to this effort. OpenAI (the leader), Anthropic (the safety-minded one), xAI (the rebel), Mistral (the Europeans), DeepSeek (the Chinese), Meta, and others are building frontier AI tools. Many are all but indistinguishable from magic.

Each of these companies purports to be the best and the most trustworthy organization to get to superintelligence for one reason or another. Elon Musk (xAI), for example, has been quite clear that he only trusts the technology if he controls it. He even attempted a long-shot bid to purchase OpenAI earlier this year. Anthropic is quite overtly committed to safety and ethics, believing it is the company best suited to develop “safe” AI tools.

(Anthropic founders Dario and Daniela Amodei and others left OpenAI in 2021 in response to concerns about AI safety. They made so-called responsible AI development central to all of their research and product work. Of course, their AI ethics didn’t necessarily extend to traditional ethics like not stealing, but that’s a conversation for another day.)

I’m not here to pick on the Amodeis, Musk, Meta, or any of the AI players. It’s clear that they’ve created amazing technologies with considerable utility. But there are concerns on a far larger scale than AI-induced psychosis in individuals or pirated books.

Ezra Klein recently interviewed Eliezer Yudkowsky on his podcast, another bonkers interview that positions AI not as just another technology but as something with a high probability of leading to human extinction.

The interview is informative and interesting, and if you have an hour, it’s worth listening to in its entirety. But I was particularly struck by the religious and metaphysical part of the conversation:

Klein:

But from another perspective, if you go back to these original drives, I’m actually, in a fairly intelligent way, trying to maintain some fidelity to them. I have a drive to reproduce, which creates a drive to be attractive to other people…

Yudkowsky:

You check in with your other humans. You don’t check in with the thing that actually built you, natural selection. It runs much, much slower than you. Its thought processes are alien to you. It doesn’t even really want things the way you think of wanting them. It, to you, is a very deep alien.

…

Let me speak for a moment on behalf of natural selection: Ezra, you have ended up very misaligned to my purpose, I, natural selection. You are supposed to want to propagate your genes above all else

…

I mean, I do believe in a creator. It’s called natural selection. There are textbooks about how it works.

I’m familiar with a different story in a different book. It’s about a creator and a creation that goes off the rails rather quickly. And it certainly strikes me that a less able creator (humans) could create something that behaves in ways that diverge from the creator’s intent.

Of course, the ultimate creator I mention knew of the coming treachery and had a plan. So for humanity, if AI goes wrong, do we have a plan? Yudkowsky certainly suggests that we don’t.

I’m still bullish on AI as a normal technology, but there are smart people in the industry telling me there are big, nasty, scary risks. And because I don’t see AI development slowing, I find these concerns more salient today than ever before.